After disabling the service, it will still start, so it also needs to be masked:

systemctl disable systemd-networkd-wait-online.service
systemctl mask systemd-networkd-wait-online.service

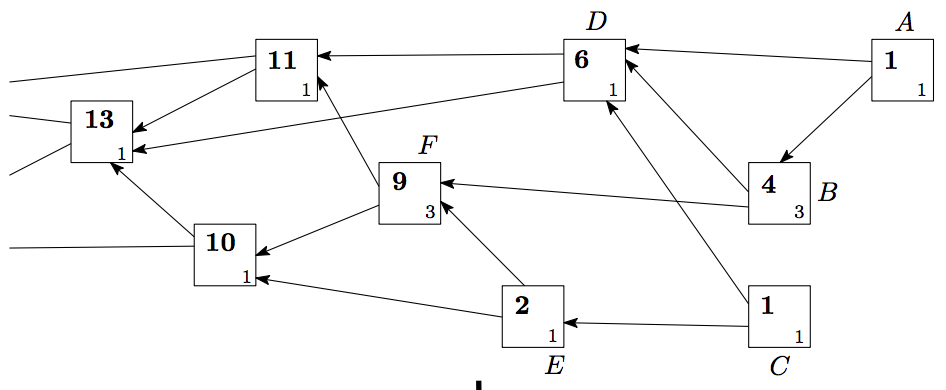

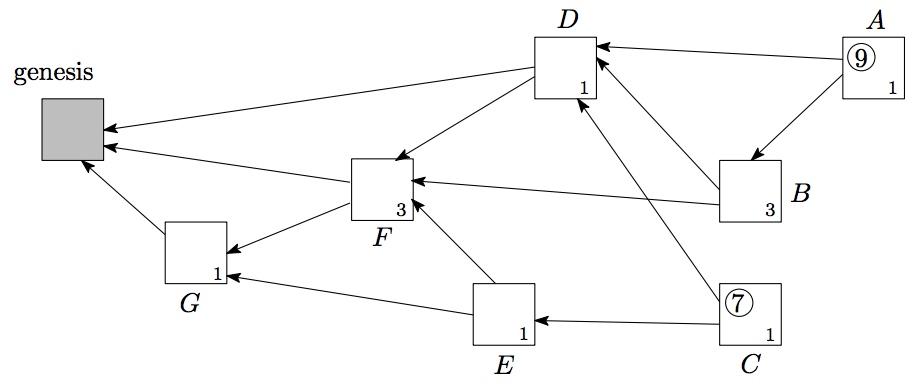

The protocol has three phases: PRE-PREPARE, PREPARE, and COMMIT. The system can tolerate at most F faulty nodes in a network of N validator nodes, where N = 3F + 1.

1. Before each round, the validators pick one of them as the proposer, by default in a round-robin fashion.
2. The proposer then broadcasts the proposed block along with a PRE-PREPARE message.
3. Upon receiving the PRE-PREPARE message from the proposer, validators enter the PRE-PREPARED state and then broadcast a PREPARE message. This step makes sure all validators are working on the same sequence and the same round.
4. Upon receiving 2F + 1 PREPARE messages, a validator enters the PREPARED state and then broadcasts a COMMIT message. This step informs its peers that it accepts the proposed block and is going to insert the block into the chain.
5. Validators wait for 2F + 1 COMMIT messages to enter the COMMITTED state and then insert the block into the chain.
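The fault-tolerance arithmetic can be checked with a few lines of shell. This is a minimal sketch: the function names max_faulty and quorum are made up for illustration, and for values of N that are not exactly 3F + 1 it assumes F is rounded down.

```shell
#!/usr/bin/env bash
# Largest F such that N >= 3F + 1 (integer division rounds down).
max_faulty() {
  local n=$1
  echo $(( (n - 1) / 3 ))
}

# Number of matching PREPARE or COMMIT messages a validator waits for: 2F + 1.
quorum() {
  local f
  f=$(max_faulty "$1")
  echo $(( 2 * f + 1 ))
}

max_faulty 4   # prints 1
quorum 4       # prints 3
```

With 4 validators the network tolerates 1 faulty node and each validator waits for 3 matching messages.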

zkver=$(curl http://www.apache.org/dist/zookeeper/stable/ |grep -o 'zookeeper-[0-9].[0-9].[0-9][0-9].tar.gz' |(head -n1 && tail -n1)|sed 's/.tar.gz//')

The results:

echo $zkver
zookeeper-3.4.10
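The pattern above only matches a two-digit patch version and treats each dot as "any character". A slightly stricter variant could look like the sketch below. The helper name latest_zk is hypothetical, and `sort -V` assumes GNU coreutils. It reads the directory listing on stdin, so it can be tried on canned input without a download.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read an HTML directory listing on stdin and print the
# highest zookeeper-X.Y.Z name found, without the .tar.gz suffix.
latest_zk() {
  grep -oE 'zookeeper-[0-9]+\.[0-9]+\.[0-9]+\.tar\.gz' \
    | sed 's/\.tar\.gz$//' \
    | sort -uV \
    | tail -n1
}

# Example with a canned listing instead of a live download:
printf '%s\n' \
  '<a href="zookeeper-3.4.9.tar.gz">zookeeper-3.4.9.tar.gz</a>' \
  '<a href="zookeeper-3.4.10.tar.gz">zookeeper-3.4.10.tar.gz</a>' \
  | latest_zk
```

Against the live listing it would be used as zkver=$(curl -s http://www.apache.org/dist/zookeeper/stable/ | latest_zk).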

telnet www.somesite 80
GET /index.html HTTP/1.1
Host: www.somesite

For secured ports, do the following:

openssl s_client -connect www.somesite:443

If the server uses a self-signed certificate on a secured port, do the following:

openssl s_client -connect 12.34.56.78:443 -servername www.somesite

The servername should be the server name specified in the TLS certificate.

git archive --format=tar --prefix=junk/ HEAD | (cd /var/tmp/ && tar xf -)

sudo pip install pep8

Then run the following command:

pep8 --select=W291,W293 --filename=*.yml *

The above command checks for trailing whitespace and whitespace-only lines in files ending with .yml in the current directory and its subdirectories.
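If pep8 is not available, a similar check can be approximated with grep. This is only a sketch, not a replacement for pep8: the function name trailing_ws is made up, and the `-r` and `--include` options assume GNU (or BSD) grep.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list lines that end in whitespace (including
# whitespace-only lines) in .yml files under the given directory.
# Prints file:line:content for each hit and nothing if the files are clean.
trailing_ws() {
  grep -rnE --include='*.yml' '[[:space:]]+$' "${1:-.}"
}
```

Run trailing_ws with no argument to scan the current directory. The exit status is non-zero when no offending lines are found.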

docker run --name ldap --hostname ldap.fabric-ca -e LDAP_ORGANISATION="Fabric CA" -e LDAP_DOMAIN="fabric-ca" -e LDAP_ADMIN_PASSWORD="ps" -d osixia/openldap:1.1.9

The above command enables TLS and creates a server certificate and private key, which can be found inside the container at this location:

/container/service/slapd/assets/certs

In the above directory you can see the ldap.crt and ldap.key files. Regardless of which hostname or CN you choose, the container seems to always use the names ldap.crt and ldap.key for the certificate and key. There is also a ca.crt, but that certificate is actually a link into the following directory, which comes with the container:

/container/service/:ssl-tools/assets/default-ca

Test the container:

docker exec ldap ldapsearch -x -H ldap://localhost -b dc=fabric-ca -D "cn=admin,dc=fabric-ca" -w ps

telnet ipaddress port
stats

For example:

telnet 172.16.21.3 2181
Trying 172.16.21.3...
Connected to 172.16.21.3.
Escape character is '^]'.
stats
Zookeeper version: 3.4.9-1757313, built on 08/23/2016 06:50 GMT
Clients:
 /172.16.21.4:58476[1](queued=0,recved=321,sent=327)
 /172.16.38.0:55630[1](queued=0,recved=245,sent=245)
 /172.16.39.0:38124[1](queued=0,recved=240,sent=240)
 /172.16.21.1:39190[0](queued=0,recved=1,sent=0)

Latency min/avg/max: 0/0/14
Received: 807
Sent: 812
Connections: 4
Outstanding: 0
Zxid: 0x100000033
Mode: leader
Node count: 31
Connection closed by foreign host.
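When checking several ZooKeeper nodes, it can help to pull just the Mode line out of that output. A minimal sketch follows. The helper name zk_mode is hypothetical, and it only parses text given on stdin, so it works on saved output as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the text returned by the stats command on stdin
# and print the value of the "Mode:" line (for example leader or follower).
zk_mode() {
  awk -F': ' '$1 == "Mode" { print $2 }'
}

# Example with a fragment of the output shown above:
printf 'Zxid: 0x100000033\nMode: leader\nNode count: 31\n' | zk_mode
```

Assuming nc is installed, the live equivalent would be something like echo stats | nc 172.16.21.3 2181 | zk_mode.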

1. docker exec -it zookeeper1st bash
2. /zookeeper-3.4.9/bin/zkCli.sh ls /brokers/ids

WatchedEvent state:SyncConnected type:None path:null
[1, 2, 3]

or

1. docker exec -it kafka3rd bash
2. ./kafka-topics.sh --list --zookeeper zookeeper1st:2181
3. ./kafka-topics.sh --describe --zookeeper zookeeper1st:2181

openssl pkcs8 -topk8 -nocrypt -in tradfile.pem -out p8file.pem

Here is an example.

1. Generate a CA private key

openssl ecparam -genkey -name prime256v1 -noout -out ca.key

2. Convert that key to pkcs8 format (Do not have to do this)

openssl pkcs8 -topk8 -nocrypt -in ca.key -out ca.sk

3. Create certificate for CA

openssl req -x509 -new -SHA256 -nodes -key ca.sk -days 1000 \
  -out ca.crt -subj "/C=US/ST=NC/L=Cary/O=orga/CN=ca.orga"

4. Generate a private key for a server or user and convert to pkcs8 format

openssl ecparam -genkey -name prime256v1 -noout -out server.key
openssl pkcs8 -topk8 -nocrypt -in server.key -out server.sk   # optional

5. Create a certificate signing request (CSR)

openssl req -new -SHA256 -key server.sk -nodes -out server.csr \
  -subj "/C=US/ST=NC/L=Cary/O=orga/CN=peer1.orga"

6. Once generated, you can view the full details of the CSR:

openssl req -in server.csr -noout -text

7. Now sign the certificate using the CA keys:

openssl x509 -req -SHA256 -days 1000 -in server.csr -CA ca.crt \
  -CAkey ca.sk -CAcreateserial -out server.crt
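After signing, it is worth confirming that the server certificate really chains to the CA. The sketch below wraps openssl verify in a small function. The name check_signed is made up for illustration, and the file names are the ones used in the steps above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the certificate verifies against the CA.
# Usage: check_signed ca.crt server.crt
check_signed() {
  openssl verify -CAfile "$1" "$2" >/dev/null 2>&1
}

# Only run the check when both files from the steps above are present.
if [ -f ca.crt ] && [ -f server.crt ]; then
  if check_signed ca.crt server.crt; then
    echo "server.crt is signed by ca.crt"
  else
    echo "server.crt does NOT verify against ca.crt"
  fi
fi
```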

sudo nano /etc/dhcp/dhclient.conf

Find the commented-out line with the word supersede next to it:

#supersede domain-name "...."

Remove the leading # and replace supersede with append, then add the domain names you wish to search (follow the example below and leave a space after the first "):

append domain-name " ubuntu.com ubuntu.net test.ubuntu.com";

If you want to use completely different search domains, keep the word supersede and only replace the domain names:

supersede domain-name " fabric.com whatever.io";

openssl ecparam -list_curves

Choose one of the listed curves, for example one named sect* or secp*. For this tutorial I choose secp521r1 (a curve over a 521-bit prime).

1. Generate the private key:

openssl ecparam -name secp521r1 -genkey \
  -param_enc explicit -out private-key.pem

2. Create the self-signed X509 certificate:

openssl req -new -x509 -key private-key.pem \
  -out server.pem -days 730

The newly created server.pem and private-key.pem are the certificate and the private key, respectively. The -param_enc explicit option tells openssl to embed the full parameters of the curve in the key, as opposed to just its name. This allows clients that are not aware of the specific curve name to work with it, at the cost of slightly increasing the size of the key (and the certificate).

To inspect the key and the certificate:

openssl ecparam -in private-key.pem -text -noout
openssl x509 -in server.pem -text -noout

Most web servers expect the private key to be chained to the certificate in the same file. So run:

cat private-key.pem server.pem > server-private.pem

And install server-private.pem as your certificate. If you don't concatenate the private key to the certificate, at least Lighttpd will complain with the following error:

SSL: Private key does not match the certificate public key, reason: error:0906D06C:PEM routines:PEM_read_bio:no start line

apt install openjdk-8-jre-headless

2. Create a directory named review:

mkdir -p ~/review

3. Download gerrit.war; this assumes the war you are getting is gerrit-2.14.6.war:

wget https://www.gerritcodereview.com/download/gerrit-2.14.6.war

4. Run the following command from the directory containing the gerrit war file:

java -jar gerrit-2.14.6.war init -d ~/review

sudo cp ~/review/bin/gerrit.service /lib/systemd/system

Edit the /lib/systemd/system/gerrit.service file. Make sure that the StandardInput=socket line is REMOVED:

# StandardInput=socket

Without removing the above line, the service will wait for a socket, which we do not really need.

Environment=GERRIT_HOME=/home/ubuntu/review

Environment=JAVA_HOME=/usr/lib/jvm/java-8-oracle/jre

ExecStart=/usr/bin/java -Xmx1024m -jar \
  ${GERRIT_HOME}/bin/gerrit.war daemon -d ${GERRIT_HOME}

6. Start the gerrit service:

sudo systemctl start gerrit.service

[plugins]

allowRemoteAdmin = true

The events-log.jar download location:

https://gerrit-ci.gerritforge.com/job/plugin-events-log-bazel-stable-2.14/lastSuccessfulBuild/artifact/bazel-genfiles/plugins/events-log/events-log.jar

To use git-review, you may need to do this:

git remote rename origin gerrit

If you would like to use an existing gerrit.config file, save the following content in the review/etc/gerrit.config file, then run the java command.

[gerrit]

basePath = git

serverId = edb71efd-32fe-48ae-a18b-b287e9f825a5

canonicalWebUrl = http://192.168.56.30:9090/

[database]

type = h2

database = /home/ubuntu/review/db/ReviewDB

[index]

type = LUCENE

[auth]

type = DEVELOPMENT_BECOME_ANY_ACCOUNT

[plugins]

allowRemoteAdmin = true

[receive]

enableSignedPush = false

[sendemail]

smtpServer = localhost

[container]

user = ubuntu

javaHome = /usr/lib/jvm/java-8-oracle/jre

[sshd]

listenAddress = *:29418

[httpd]

listenUrl = http://*:9090/

[cache]

directory = cache

refs/*
refs/heads/*

export GOPATH="/home/ubuntu/hl"
export GOROOT="/usr/local/go"
PATH="$GOROOT/bin:$GOPATH/bin:$PATH"

APT::Periodic::Update-Package-Lists "0";

Then disable the following services:

systemctl disable apt-daily.timer
systemctl disable apt-daily.service

if [ -z "$SSH_AUTH_SOCK" ] ; then
  eval `ssh-agent -s`
  ssh-add ~/.ssh/interop
fi

2. Kill the agent on logout by adding the following to .bash_logout:

trap 'test -n "$SSH_AGENT_PID" && eval `/usr/bin/ssh-agent -k`' 0

1.0 'g' stands for 'gRPC'
1.1 'g' stands for 'good'
1.2 'g' stands for 'green'
1.3 'g' stands for 'gentle'
1.4 'g' stands for 'gregarious'

git diff-tree --no-commit-id --name-only -r $(git log -2 --pretty=format:"%h")

sudo apt-get install docker.io

Remove all docker images:

docker rmi $(docker images -a -q)

Remove all exited containers and dangling images:

docker rm $(docker ps -a -f status=exited -q)
docker rmi -f $(docker images -f "dangling=true" -q)

Stop all running containers:

docker stop $(docker ps -a -q)

Connect to a running container:

docker exec -it ContaineridOrName bash

Run a docker container and interact with the container:

docker run -it --rm busybox

Normally a regular user cannot run docker because of permissions. The following commands assume that a user group named "docker" was created during the docker install. They add the currently logged-in user to the docker group and then refresh the group, after which the current user can run docker without sudo:

sudo gpasswd -a $USER docker
newgrp docker
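To confirm that the group change took effect, the membership can be checked from the shell. This is a small sketch. The function name in_group is made up, and it only reads the group database through id, so it does not talk to docker itself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given user belongs to the given group.
# Usage: in_group USER GROUP
in_group() {
  id -nG "$1" | tr ' ' '\n' | grep -qxF "$2"
}

if in_group "$(id -un)" docker; then
  echo "current user is in the docker group"
else
  echo "current user is not in the docker group"
fi
```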

sudo chmod 666 /var/run/docker.sock

sudo apt-get update
sudo apt-get -y install python-dev python-pip libtool libltdl-dev
sudo pip install --upgrade pip
sudo pip install behave nose docker-compose protobuf couchdb==1.0

2. Install golang 1.7 or later from https://golang.org/doc/install by first downloading a version matching your environment, then run the command below:

tar -C /usr/local -xzf go1.8.1.linux-amd64.tar.gz

Do not forget to add the Go binaries to your PATH:

PATH="/usr/local/go/bin:$PATH"

3. Install docker engine:

sudo apt-get install docker.io

4. Create a working directory and set up GOPATH:

mkdir -p ~/gopath/src/github.com/hyperledger
export GOPATH=~/gopath

5. Clone the source code into the directory created in step 4:

cd $GOPATH/src/github.com/hyperledger
git clone http://gerrit.hyperledger.org/r/fabric

6. Build fabric binaries:

cd $GOPATH/src/github.com/hyperledger/fabric
make dist-clean all

or make an individual target like this:

make peer

sudo apt-get update
sudo apt-get install python-dev python-pip
sudo pip install --upgrade pip
sudo pip install behave

wget -q -O - https://pkg.jenkins.io/debian/jenkins.io.key \
  | sudo apt-key add -

2. Then add the following entry to your /etc/apt/sources.list:

deb https://pkg.jenkins.io/debian binary/

3. Run the following two commands to install Jenkins:

sudo apt-get update
sudo apt-get install jenkins

4. If there were no errors during the above steps, you can find the generated admin password here:

/var/lib/jenkins/secrets/initialAdminPassword

5. Point a browser to the following URL and use the admin password found in step 4 to configure Jenkins:

http://<<IP_Address_of_server>>:8080

6. Select some plugins to install from the Jenkins dashboard.

openssl genrsa -out ca.key 2048

openssl req -x509 -new -nodes -key ca.key \
  -subj "/CN=${MASTER_IP}" -days 1000 -out ca.crt

openssl genrsa -out server.key 2048

4. Use the server.key to generate a Certificate Signing Request "server.csr":

openssl req -new -key server.key -subj "/CN=${MASTER_IP}" \
  -out server.csr

openssl x509 -req -in server.csr -CA ca.crt -CAkey ca.key \
  -CAcreateserial -out server.crt -days 10000

openssl x509 -noout -text -in ./server.crt

docker run --rm gcr.io/google-containers/busybox

Use docker to run an interactive container from gcr:

docker run -i -t gcr.io/google-containers/busybox

Use kubectl to run an interactive container from gcr:

kubectl run -i -t tongbusy --image=gcr.io/google-containers/busybox

Attach to a running container in a pod:

kubectl attach tongbusy-400598208-v0jpg -c tongbusy -i -t

Install pypy on CoreOS:

wget https://bitbucket.org/squeaky/portable-pypy/downloads/pypy-5.6-linux_x86_64-portable.tar.bz2
tar xf pypy-5.6-linux_x86_64-portable.tar.bz2

Check the CoreOS release:

cat /etc/os-release

journalctl -u [unitfile]

For example, for a service named kube-apiserver, do the following:

sudo journalctl -u kube-apiserver

Check the logs of the other Kubernetes services:

sudo journalctl -u kube-controller-manager
sudo journalctl -u kube-scheduler
sudo journalctl -u kubelet
sudo journalctl -u kube-proxy

sudo apt-get update
sudo apt-get install python-dev python-pip libssl-dev libffi-dev -y
sudo pip install --upgrade pip
sudo pip install six==1.10.0
sudo pip install shade==1.16.0
sudo pip install ansible==2.2.1.0

The versions above are the ones required by the OpenStack Interop Challenge workload.